AI Liability: Who Gets Sued When Your Bot Goes Rogue?

When AI makes a mistake, it’s your legal problem. Learn who’s liable when automation crosses the line.

Published under The Legal Hat on HatStacked.com

The moment your AI assistant “accidentally” spams your customer list with cat memes, you’ll wish you had read this post first.

The problem no one’s ready for

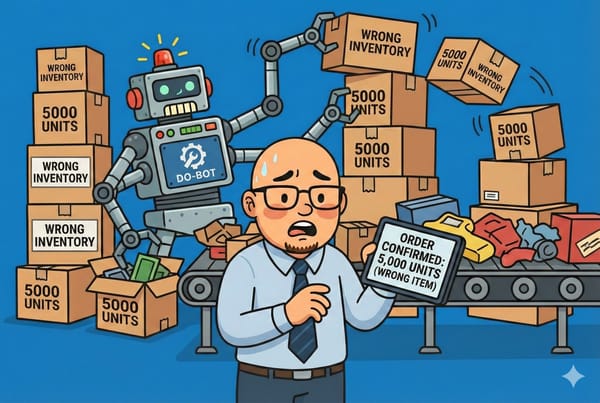

AI is doing more work than ever for small businesses. It’s writing emails, summarizing contracts, analyzing data, and even making hiring recommendations. But what happens when that same AI makes a costly mistake?

Say your chatbot gives out a coupon that never expires. Or your AI copywriter plagiarizes. Or your automated invoice system charges the wrong amount to fifty customers in one day. Who’s responsible when things go sideways?

Spoiler: It’s you. Almost always you.

Why “the AI did it” isn’t a defense

There’s a myth floating around that artificial intelligence is some kind of legal black hole, a sentient intern you can blame when everything goes wrong. Unfortunately, the law doesn’t see it that way.

AI is not a legal entity. It can’t sign a contract, hold responsibility, or get sued. It’s a tool, not a person. When you use an AI model in your business, you assume responsibility for its output, just as you would for a spreadsheet formula or an employee’s email.

If your AI tool defames someone, violates copyright, leaks private data, or breaks consumer law, regulators and courts will look at the human decision makers behind it. “The algorithm did it” will not fly.

Where the lawsuits are already happening

While lawsuits against small businesses over AI mishaps are still rare, the legal storm clouds are forming. Here are some early examples that set the stage:

- Copyright infringement: Writers and artists have sued AI companies for scraping their work to train models. If your business uses AI-generated content based on copyrighted data, you could inherit that risk.

- Defamation: When an AI chatbot falsely accused a radio host of fraud, lawyers quickly debated who would be liable: the user, the AI company, or both.

- Employment discrimination: Automated hiring tools have been accused of bias. If you rely on AI to screen candidates and it filters out protected groups, you’re the one on the hook.

The takeaway: your business’s use of AI already falls under existing laws. There’s no AI exception clause in the legal system.

The contracts are written to protect them, not you

If you think the companies selling AI tools will share your legal risk, think again. Read the terms of service for nearly any major AI platform and you’ll find language like this:

“You are solely responsible for any content generated using the service.”

That means if your AI output leads to a legal claim, it’s your problem, not theirs. Even the most trusted providers—OpenAI, Google, Anthropic—explicitly disclaim responsibility for how users deploy their models.

AI vendors often include indemnity clauses stating that you will defend them if someone sues over your use of their product. In other words, you might be paying their legal bills too.

Before integrating any AI tool into your workflow, make it standard practice to actually read the terms and conditions. Yes, all of them. Your lawyer will thank you.

The difference between negligence and misuse

The law draws a thin but important line between AI negligence and AI misuse.

- Negligence happens when you fail to reasonably supervise your AI system. For example, if you use an AI invoice tool that miscalculates taxes for months and you never check, that’s negligence.

- Misuse occurs when you intentionally or recklessly deploy AI in a way that causes foreseeable harm, like using a text generator to write fake product reviews.

Negligence is bad. Misuse is worse. Both are your responsibility.
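To make the "you never check" part concrete, here is a minimal sketch of a spot check you could run against invoices an AI tool has produced. It is an illustration, not a tax engine: the invoice fields (`id`, `subtotal`, `tax`), the flat 8% rate, and the one-cent tolerance are all assumptions made up for the example.

```python
from decimal import Decimal, ROUND_HALF_UP


def expected_tax(subtotal: Decimal, rate: Decimal) -> Decimal:
    """Recompute tax independently of the AI tool, rounded to the cent."""
    return (subtotal * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def find_mismatches(invoices, rate, tolerance=Decimal("0.01")):
    """Return the ids of invoices whose tax differs from our own calculation."""
    flagged = []
    for inv in invoices:
        diff = abs(inv["tax"] - expected_tax(inv["subtotal"], rate))
        if diff > tolerance:
            flagged.append(inv["id"])
    return flagged


# Hypothetical sample data: the second invoice has a misplaced decimal.
invoices = [
    {"id": "INV-001", "subtotal": Decimal("100.00"), "tax": Decimal("8.00")},
    {"id": "INV-002", "subtotal": Decimal("250.00"), "tax": Decimal("2.00")},
]

print(find_mismatches(invoices, rate=Decimal("0.08")))  # ['INV-002']
```

Running something like this weekly, and keeping the results, is the kind of evidence that you were checking rather than hoping.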

What “reasonable supervision” looks like

Courts tend to favor business owners who can prove they made a reasonable effort to manage their AI tools. Here’s what that looks like in practice:

- Human review. Always have a person approve customer-facing AI output, such as marketing copy, chat responses, or pricing updates.

- Audit trails. Keep logs of what AI systems do, when they do it, and who approved it.

- Training data transparency. Know what data sources your AI relies on. If the vendor won’t tell you, that’s a red flag.

- Security checks. Protect sensitive data that passes through AI systems. Encryption, access control, and deletion policies matter.

- Policy documentation. Write an internal AI policy outlining how, when, and by whom AI can be used.

Those steps won’t make you lawsuit-proof, but they’ll show regulators and insurance companies that you’re not asleep at the keyboard.
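For the technically inclined, the first two items (human review and audit trails) can be sketched in a few lines. This is a rough illustration under assumptions, not a compliance system: the `AuditEntry` fields, the tool name, and the reviewer address are hypothetical, and a real setup would write to durable storage instead of a list in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


def now_utc() -> str:
    """Timestamp in UTC so log entries are comparable across systems."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditEntry:
    tool: str                          # which AI system produced the output
    output: str                        # what it produced
    created_at: str                    # when it produced it
    approved_by: Optional[str] = None  # who signed off (None = nobody yet)
    approved_at: Optional[str] = None  # when they signed off


def log_output(log: list, tool: str, output: str) -> AuditEntry:
    """Record every AI output as soon as it exists, approved or not."""
    entry = AuditEntry(tool=tool, output=output, created_at=now_utc())
    log.append(entry)
    return entry


def approve(entry: AuditEntry, reviewer: str) -> None:
    """A named person takes responsibility for this output."""
    entry.approved_by = reviewer
    entry.approved_at = now_utc()


def can_send(entry: AuditEntry) -> bool:
    """Customer-facing output goes out only after human approval."""
    return entry.approved_by is not None


audit_log = []
draft = log_output(audit_log, "email-assistant", "20% off, this week only!")
print(can_send(draft))  # False

approve(draft, "dana@example.com")
print(can_send(draft))  # True
```

The design choice worth copying is that the draft is logged before anyone approves it, so the record shows what the AI produced even when a human rejects it.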

The insurance question no one asks

Few business insurance policies cover AI-related risks yet. General liability, cyber, and professional indemnity policies were written long before large language models showed up.

If you rely on AI for customer interaction or financial transactions, call your broker and ask directly:

- Does our policy cover claims from AI-generated errors?

- Would it cover reputational damage or defamation caused by an AI system?

- Do we need a cyber or technology endorsement?

Insurers are already experimenting with “AI malpractice” coverage, but it’s early. Until that becomes mainstream, your best defense is process documentation.

What about open-source and DIY AI?

Open-source AI models feel like a loophole, but they come with risks of their own. Many licenses specifically state that the creators take no responsibility for what the model produces.

If you modify or fine-tune an open-source AI and then use it commercially, you may be treated as its developer in the eyes of the law. That means you could inherit liability for what it generates.

Running your own AI sounds empowering until you realize you’ve just promoted yourself to “Chief Risk Officer.”

The gray area of “AI advice”

Many small businesses now use AI to generate suggestions, whether for contracts, HR decisions, or financial planning. The line between “advice” and “execution” is blurry.

If your AI drafts a contract that misses a key clause and you use it without legal review, you’re still responsible for that omission. AI doesn’t have a license to practice law or accounting, and using its output as if it did is a quick way to get burned.

If you use AI for advice, treat it as a brainstorming partner, not a replacement for professional expertise.

Related: The Small Business Guide to AI in 2025

The ethical layer matters too

Beyond liability, there’s reputation. Customers can forgive an honest mistake, but they won’t forget an AI that breaks their trust.

Transparency builds goodwill. Tell customers when they’re interacting with AI. Offer a clear way to reach a human if something goes wrong. And most importantly, train your staff to recognize when AI shouldn’t be involved at all.

When in doubt, remember: automation saves time, but accountability earns loyalty.

The bottom line

AI can do remarkable things for small businesses, but it’s not a shield against responsibility. When your bot goes rogue, you’re the one holding the bag.

Treat AI like any other employee: train it, supervise it, audit it, and make sure it plays nicely with others. The goal isn’t to avoid AI; it’s to use it without becoming tomorrow’s headline.

If you wouldn’t let a new hire send emails unsupervised on their first day, don’t let a machine do it either.

Disclaimer

This post is for educational purposes, not legal advice. Seriously, don’t take legal advice from a cartoon guy in a tie. Laws vary by state, country, and level of chaos. If you think your AI might actually get you sued, call a real attorney before you call us. We write about AI liability; we don’t cover it.